Gus Xia is a computer science expert and assistant professor at NYU Shanghai; he is also a professional musician. Professor Xia, who has performed as the prime soloist of the Chinese Music Institute at Peking University and as a soloist with the Pitt Carpathian Ensemble in Pittsburgh, is using his passion for music to expand our understanding of the dynamics between humans and machines. He tells us about his latest work and whether AI can ever replace a human musician.

You trained as a traditional musician, playing the Chinese flute. What sparked your interest in machine learning and computer music?

It’s actually a very simple story of an undergrad wanting to combine his interest with his major. While doing my undergrad at Peking University, I looked for an opportunity to combine my studies in information science with my passion for music. Luckily, my music teacher at the time was familiar with computer music, so I was able to dive right in. I was also a professional Chinese flute player for 10 years before I pursued a Ph.D. in Machine Learning at Carnegie Mellon University.

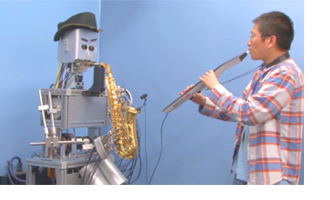

Your current projects explore interactive robot and human musical performance. Can robot performers ever be as emotionally engaging?

The application of AI to music will be different from, say, the development of self-driving cars. We can be fairly confident that in the future, self-driving cars will be more accurate and safer than human drivers, eliminating traffic jams and accidents. For music, it’s still a debate whether robots can ‘do better.’ There’s room for them to become better technical performers, but compared with real musicians, a robot still lacks deeper interaction and is unable to pick up musical cues at the human level. Humans can just look at each other and there’s a magic going on, but robots must be programmed and we do not know how to code the magic part yet.

If I compare working with just a computer to working with an interactive robot, the interactive robot is certainly more engaging. There are studies showing that when people switch from playing with a plain computer to playing with an animated robot, they feel more like they are working with humans. Think of a robot as a way to hack the human perception of intimacy: as long as there is a moving body, especially a humanoid one, people tend to act as if it is alive.

Could AI be used to replace humans in music education or entertainment industries?

I think it’s really about creating a different market and space, not replacing humans. There’s already a Japanese virtual popstar, Hatsune Miku, voiced by a singing synthesizer application. She is completely fictional and digital and yet people have been going crazy about her ‘live’ solo concerts for about 10 years now. It’s a different market appealing to a target audience who might not be the same group of people who go to the Shanghai Concert Hall to appreciate Beethoven.

AI is just a tool, just like a car. It depends on how we as humans use the tool and how we make it work for us. We can use it either to downgrade or to upgrade the human mind, and building a complete dependency on computer intelligence is a problem. Einstein said: “Keep it simple but not simpler,” and I think that, in that spirit, we should use technology but not become its slave.

OK then, how can Artificial Intelligence help humans better appreciate, compose or perform music?

It can help us appreciate music when we use programs like Spotify or Pandora. Both use techniques such as collaborative filtering and content-based recommendation to help listeners discover new artists similar to those they already listen to. There’s also software now at our fingertips, like Logic Pro X and other digital audio workstations, that allows us to become more productive composers.

My current project, Haptic Guidance for Flute Tutoring, addresses the performance aspect. It is software that quickly builds muscle memory by directly translating a piece of music into finger motions, so one can learn by doing and memorize the sequence by feel. I was motivated by the fact that many people who aren’t musicians want to learn how to play a simple song but feel intimidated by the pain of memorizing all of the notation. Haptic guidance is not a substitute for an actual flute teacher, but it allows laypeople to learn quickly so that they can experience performance or at least serenade their daughter with a “Happy Birthday” song.

My hope is that after this experience, people will no longer think performing with instruments is out of their reach. It might inspire further interest or encourage them to learn and play more songs.

Professor Xia’s work includes human-computer interactive performances, autonomous dancing robots, smart music displays, and large-scale content-based music retrieval.